It’s been almost a year since the emergence of “AI art” platforms (they’re neither truly “AI” nor art), and in that time artists have had to sit back and watch helplessly as their creative works have been sucked up by machine learning and used to create new images without credit or compensation.

Now, though, a team of researchers at the University of Chicago, working with artists (some of whom featured in my story from last year), has come up with something they hope will let artists take active steps to protect their work.

It’s called Glaze, and it works by adding a second, almost invisible layer on top of a piece of art. What makes the whole thing so interesting is that this layer isn’t made of noise or random shapes. It contains another piece of art, one with roughly the same composition but in a totally different style. You won’t notice it’s there, but any machine learning platform trying to lift the image will, and when it tries to study the art it’ll get very confused.

Glaze targets one particular capability of these machine learning platforms: letting users “prompt” images based on a specific human artist’s style. Someone can ask for an illustration in the style of Ralph McQuarrie, and because those platforms have lifted enough of McQuarrie’s work to know how to copy it, they’ll get something that looks roughly like the work of Ralph McQuarrie.

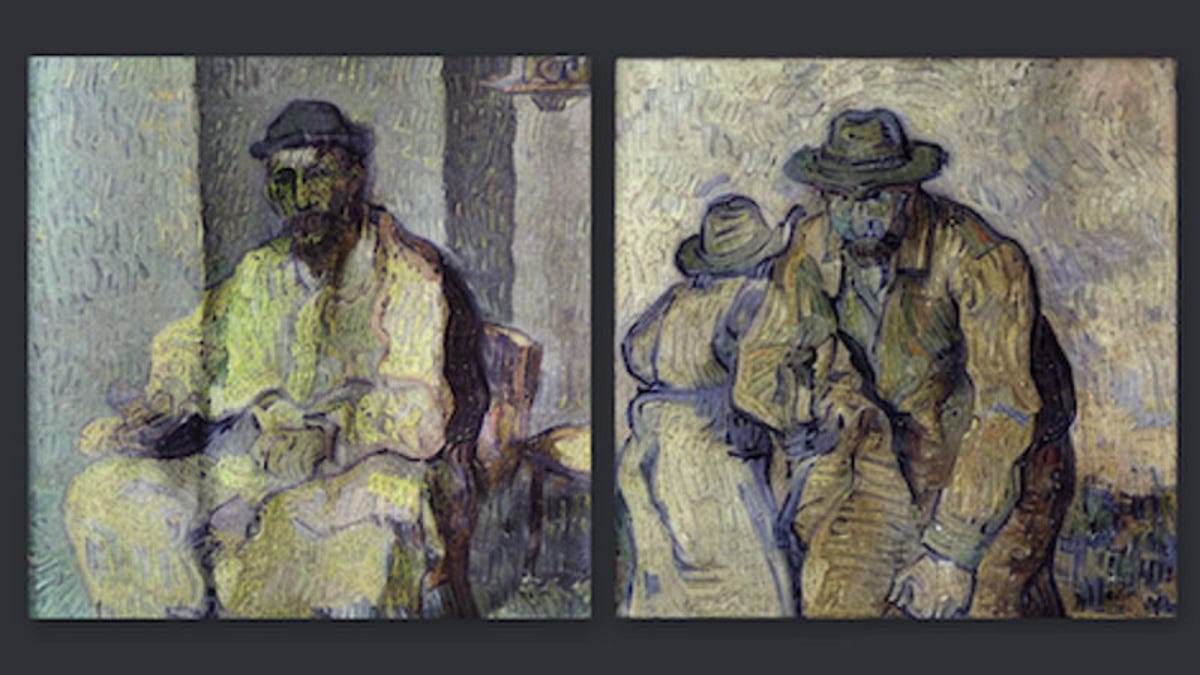

By covering a piece of art with another piece of art, though, Glaze throws these platforms off the scent. Using artist Karla Ortiz as an example, the team explains:

> Stable Diffusion today can learn to create images in Karla’s style after it sees just a few pieces of Karla’s original artwork (taken from Karla’s online portfolio). However, if Karla uses our tool to cloak her artwork, by adding tiny changes before posting them on her online portfolio, then Stable Diffusion will not learn Karla’s artistic style. Instead, the model will interpret her art as a different style (e.g., that of Vincent van Gogh). Someone prompting Stable Diffusion to generate “artwork in Karla Ortiz’s style” would instead get images in the style of Van Gogh (or some hybrid). This protects Karla’s style from being reproduced without her consent.

Neat! Of course, this does nothing for the countless billions of images that have already been lifted by these platforms, but in the short term at least, it finally gives artists something they can use to actively protect any new work they post online. How long that short term lasts is anyone’s guess, though, as the Glaze team admit it’s “not a permanent solution against AI mimicry”, since “AI evolves quickly, and systems like Glaze face an inherent challenge of being future-proof”.

If you want to try Glaze out, you can download it here, where you can also read the team’s full academic paper. Below you’ll find an example of the tech in action, with Ortiz’s original painting coming out “borked” when a machine learning platform tried to analyse it.
